Smart Storage System

ECE5725 Final Project

A Project By Joash Shankar (jcs556), Ming He (hh759), Junze Zhou (jz2275)

Demonstration Video

Introduction

Managing personal packages is difficult: conventional storage options often lack adequate security and organization, as well as the technological integration needed to streamline package delivery and collection. Our Smart Storage System offers a more secure and efficient storage solution. By integrating facial recognition, electronic tagging, and automated notifications with timer-based logic, it automates the package delivery and retrieval process and its overall management.

Project Objective:

- Implement a design that leverages advanced technologies to provide a secure and efficient solution for managing the storage and retrieval of packages

- Facial recognition for valid/invalid users

- April tag scanning for packages linked to users

- Email notifications sent to users

- LEDs/sound to act as an indicator if the user is valid or not

- Servos to open the bins

Project Model

Home Page

Design

In this project, our system emulates the functionality of a real-life apartment package management system. It categorizes individuals into three distinct groups: authorized users, delivery personnel, and unauthorized individuals. The process begins when a doorbell button is pressed, accompanied by an audio cue signaling the initiation of the facial recognition procedure. The system is adept at distinguishing between these groups.

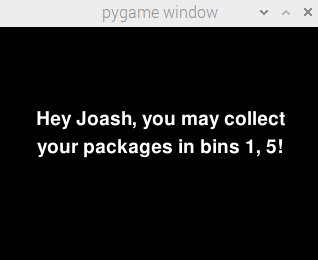

For authorized users, a green LED lights up and the PiTFT screen displays relevant information, such as whether they have packages and the specific bin numbers holding them (if any). An email notification is also sent indicating that they picked up their package. For unauthorized individuals, the system responds with an "Access Denied" message, accompanied by a red LED and an audio alert specific to invalid users.

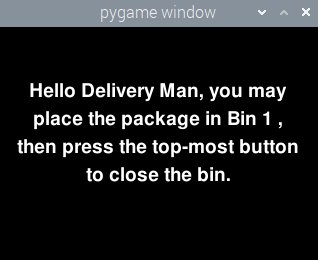

For delivery personnel, the procedure includes a green LED signal and on-screen instructions detailing the steps for scanning shipping labels, which are represented by various April tags, and placing packages into a bin chosen by the system. Once the delivery man places a user’s package, that user will receive an email notification that their package has been delivered. The system also incorporates an algorithm to manage situations where the storage bins are fully occupied. As part of this, if a user didn’t pick up their package within a specified time range, they will receive an email alert that they should pick up their package at a USPS store.

In summary, this innovative Smart Storage System aims to streamline and secure the process of package handling in residential settings.

Flow Chart of our System

As indicated in the flow chart above, on each iteration of the main loop the program checks whether a "Program flag" is asserted. "Program flag" refers to the four flags we defined to control the logic flow: "pkg_Delivered", "pkg_Picked", "out_time" and "invalid_user".

- "pkg_Delivered" is asserted when the delivery person has successfully delivered some packages.

- "pkg_Picked" indicates that a user has picked up their package.

- "invalid_user" indicates that an unknown person tried to use the system.

- "out_time" is asserted in any of the following timeout situations:

  - A user presses the facial detection start button, but the system cannot detect anyone for a period of time.

  - The delivery person is detected, but no April tag is detected for some time.

  - The delivery person and April tag are both detected, but the delivery person forgets to press the bin close button.
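
The timeout cases above share one pattern: record a start time, then compare the elapsed time against a limit on every pass through a loop. The following is a minimal sketch of that pattern, not the project's actual code. The helpers `timed_out` and `poll_until` are hypothetical names; the 60 s and 30 s limits are the values that appear in the code appendix and in the bin-closing description. The clock is passed in as a parameter so the logic can be exercised without waiting.

```python
import time

DETECT_LIMIT_S = 60      # matches detect_cam_time_limit in the appendix code
AUTO_CLOSE_LIMIT_S = 30  # auto-close delay described for the bin close button


def timed_out(start, limit_s, now=None):
    """Return True once more than limit_s seconds have passed since start."""
    if now is None:
        now = time.time()
    return (now - start) > limit_s


def poll_until(check, limit_s, clock=time.time, sleep=time.sleep, period_s=0.1):
    """Call check() until it returns a truthy value or the limit expires.

    Returns (result, out_time); out_time is True when the limit was hit first.
    """
    start = clock()
    while not timed_out(start, limit_s, clock()):
        result = check()
        if result:
            return result, False
        sleep(period_s)  # avoid spinning the CPU between checks
    return None, True
```

In this sketch, `check` would stand in for "was a face found in this frame?" or "was a tag found?", and the returned `out_time` value plays the role of the "out_time" flag.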

Flow Chart of Delivery Personnel

As for the "Delivery Person" flow, this flow chart illustrates the subprocess that runs when a delivery person is detected. The delivery logic implements several mechanisms. In addition to the timeout handling described above, the system automatically finds available bins and deals with packages that have not been picked up for a long time. Specifically, the system records how long each package has been stored in its bin, which we define as the "protection time" (time elapsed).

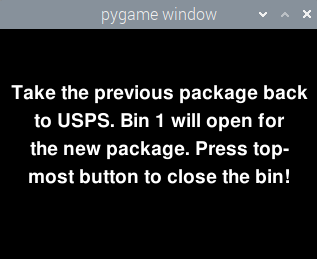

When the "protection time" of a package exceeds the time limit, the package is no longer "protected": the pick-up window has expired, and the delivery person should take the old package out of the bin and put the new one in its place (the delivery person would then bring the old package to the USPS store for the associated user to pick up later).

Software Design

The primary components of software include facial recognition, april tag scanning, and overall bin placement/retrieval logic.

Facial Recognition

For facial recognition, we detect and recognize faces in the video stream from the RPi camera through process_face_detection(). When called, this either a) finds a valid or invalid face and moves on to the next step or b) times out if no faces are detected within a set timeframe. It first loads the pre-saved facial encodings of known users (generated by train_model.py). Then, for each frame, it detects faces and calculates their facial encodings. These encodings are compared against the pre-saved encodings to find matches, identifying whether a face belongs to a known user or is unknown. Three cases arise from this:

- If a known user is detected, display a message to welcome the user and indicate if they have package(s) to pick up or not and light the LED green

- If a delivery man is detected, start the AprilTag scanning process and light the LED green

- If an unknown user is detected, display a message that they are an invalid user, light the LED red, and play invalid sound
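
The matching step and the three cases above can be sketched in plain Python. This is a simplified stand-in, not the project's process_face_detection(): real encodings from the face_recognition library are 128-dimensional vectors, while the usage example below uses 3-dimensional toy vectors. The function names `identify` and `classify` and the "Delivery Man" label are assumptions made for illustration; the 0.6 tolerance is the default used by face_recognition.compare_faces.

```python
import math

TOLERANCE = 0.6  # default tolerance of face_recognition.compare_faces


def identify(encoding, known_encodings, known_names, tolerance=TOLERANCE):
    """Return the name of the closest known face, or "Unknown" if none is near enough."""
    best_name, best_dist = "Unknown", tolerance
    for known, name in zip(known_encodings, known_names):
        dist = math.dist(encoding, known)  # Euclidean distance between encodings
        if dist <= best_dist:
            best_name, best_dist = name, dist
    return best_name


def classify(name):
    """Map a recognized name to one of the three cases listed above."""
    if name == "Unknown":
        return "invalid_user"
    if name == "Delivery Man":  # hypothetical label for the delivery person
        return "delivery"
    return "valid_user"


# Toy usage with made-up 3-dimensional encodings.
known = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
names = ["Joash", "Delivery Man"]
print(classify(identify([0.1, 0.0, 0.0], known, names)))
```

Picking the closest match rather than the first match within tolerance is one way to reduce confusion between similar-looking users, although it does not replace having more training images.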

Face recognition process starts

April tag recognition process starts

Invalid user detected

April Tagging

Our system includes a feature we call "April Tagging", which becomes active during the facial recognition stage when the delivery person is identified. April tags serve as shipping labels. Each captured frame is first converted to grayscale, which is the form of image the April tag detector expects. We then use a detector configured for a specific tag family (in our case, tag16h5) to process these grayscale images. For every April tag detected, we extract its ID and draw a bounding box around the tag using its corner coordinates. We use the tag ID to retrieve the relevant user information from a pre-defined mapping (the 'tag_info' dictionary). Detecting a tag associated with a user triggers the bin-opening process, which lets the delivery personnel place the package and later lets the user retrieve it.

Bin Placement/Retrieval

Allocation of available bin

Regarding the overall bin placement and retrieval logic in our system, we implemented find_open_bin(). This function manages the allocation of bins for new package placements by delivery personnel. It starts by checking whether all bins are full. If so, it calculates the time elapsed since each bin last received a package and identifies the bin with the longest elapsed time. If this elapsed time exceeds a threshold (50 seconds in our case, meaning the user didn't pick up their package in time), the system emails the user that the time to pick up their package has expired and that they should pick it up at their nearest USPS store, and the bin opens for the new package. If the threshold has not been exceeded, the system reports that no bin is available. When not all bins are occupied, the system locates the first available (open) bin for the delivery personnel.
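
The selection rule can be isolated from the display, servo, and email side effects. The sketch below is a simplified, side-effect-free version of that rule, not the find_open_bin() from the code appendix. The function name `choose_bin` and its return convention are assumptions; "bin_status", "bin_package_time", and the 50-second limit follow the names and values used in this write-up.

```python
PROTECTION_LIMIT_S = 50  # protection time used in this write-up


def choose_bin(bin_status, bin_package_time, now, limit_s=PROTECTION_LIMIT_S):
    """Pick a bin for a new package.

    Returns (bin_number, evicted). evicted is True when an expired package
    must be removed first. Returns (None, False) when no bin is available.
    """
    # Prefer the first bin that is already open (empty).
    for bin_number, status in bin_status.items():
        if status == "open":
            return bin_number, False

    # All bins are full: find the package that has waited the longest.
    oldest = max(bin_package_time, key=lambda b: now - bin_package_time[b])
    if now - bin_package_time[oldest] > limit_s:
        return oldest, True
    return None, False


# Usage: both bins full, the package in bin 1 was placed 60 s ago.
print(choose_bin({1: "close", 2: "close"}, {1: 0, 2: 40}, now=60))
```

Keeping the decision in a pure function like this makes the edge cases (free bin, expired package, nothing available) easy to check without hardware attached.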

In addition to the bin allocation function, we implemented get_package() to manage package retrieval. It locates the bins containing the user's package(s), based on the April tag ID associated with the user. Once they are identified, the system tells the user which bin(s) contain their package(s) via a message on the PiTFT.
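
The lookup described here is a two-step join: user name to tag ID, then tag ID to bins. A minimal sketch follows, assuming the "User: <name>" label format of "tag_info" and the convention that a "bin_AT" value of 0 means an empty bin. The helper names `bins_for_user` and `pickup_message` are hypothetical; the message wording mirrors the strings in the code appendix.

```python
def bins_for_user(user_name, tag_info, bin_AT):
    """Return the bin numbers that hold packages for user_name."""
    # tag_info values look like "User: Joash"; collect the tags owned by this user.
    user_tags = {tag for tag, label in tag_info.items() if label == f"User: {user_name}"}
    return [bin_number for bin_number, tag in bin_AT.items() if tag in user_tags]


def pickup_message(user_name, bins):
    """Build the on-screen message for zero, one, or several bins."""
    if not bins:
        return f"Hey {user_name}, you have no packages."
    if len(bins) == 1:
        return f"Hey {user_name}, you may collect your package at bin {bins[0]}!"
    return f"Hey {user_name}, you may collect your packages in bins {', '.join(map(str, bins))}!"


# Usage with a small example mapping.
tag_info = {1: "User: Joash", 2: "User: Ming"}
bin_AT = {1: 0, 2: 1, 3: 2, 4: 1}
print(pickup_message("Joash", bins_for_user("Joash", tag_info, bin_AT)))
```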

Throughout this whole process, we track the status of available or unavailable bins via a dictionary called "bin_status", where we update if a bin should be open (no package placed) or closed (package placed which is linked to a user via April tag ID). This status change is physically signified by the bin door opening via a servo, indicating that the bin is now available. Additionally, we send emails to the user based on whether their package has been delivered or when they picked up their package.

The functionalities of find_open_bin() and get_package() are interconnected through several dictionaries that resemble database mapping tables. The "tag_info" dictionary links each April tag (which acts as a shipping label) to a valid user. Based on our availability algorithm, the "bin_AT" dictionary records which user's tag is assigned to each system-selected bin once the April tag is scanned, managing individual package delivery and pick-up. The "bin_to_gpio" dictionary links each bin to the GPIO pin on the Raspberry Pi that drives its servo with hardware-timed PWM, so a bin door opens and closes as the servo arm moves up and down. Using the SG90 servo in conjunction with the pigpio library allows for the generation of hardware-timed PWM. The pulse widths for this setup range from 500 µs (0 degrees) to 2500 µs (180 degrees). Due to the mechanical assembly, 500 µs keeps the servo arm vertical (0 degrees), indicating an open bin door and an available bin status, whereas 1500 µs (90 degrees) keeps the arm horizontal, indicating a closed bin door and an unavailable bin status.
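
The relationship between servo angle and pulse width quoted above is linear, so it can be written as a small conversion. This is an illustrative sketch: the function name `angle_to_pulsewidth` is hypothetical, and the 500 to 2500 µs range is the one stated in this write-up.

```python
MIN_PULSE_US = 500   # pulse width at 0 degrees
MAX_PULSE_US = 2500  # pulse width at 180 degrees


def angle_to_pulsewidth(angle_deg):
    """Convert a servo angle in degrees to a pulse width in microseconds."""
    if not 0 <= angle_deg <= 180:
        raise ValueError("angle must be between 0 and 180 degrees")
    span = MAX_PULSE_US - MIN_PULSE_US
    return round(MIN_PULSE_US + span * angle_deg / 180)


print(angle_to_pulsewidth(0))   # open position in this build
print(angle_to_pulsewidth(90))  # closed position in this build
```

The resulting value is what would be passed as the pulse width to pigpio's set_servo_pulsewidth() for the GPIO pin looked up in "bin_to_gpio".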

When the delivery man places a package in a bin but forgets to press the button to close it, a timer automatically closes the bin (after 30 sec) on his behalf to ensure the package is secure.

Multiple packages

No packages

When a new package arrives, it replaces the oldest package that is outside the valid time range

Automated Emails

We also introduced an email sending functionality into our system. There are three situations where the system will send emails to the users:

- Delivered Alert: When the delivery man comes and places a package into one of the bins, an email is sent to notify the user that their package has been delivered.

- Picked-up Alert: When a valid user successfully picks up their package, the system sends an email telling the user the package has been picked up.

- Package Expiration Alert: When all the bins are filled, the user whose package has gone unclaimed the longest gets an email saying that the time to pick up their package has expired and that they should go to the USPS store to pick it up.

To implement email functionality, we used a Gmail account as the system's sender, an SMTP server to handle delivery, and a function called "send_email" that actually sends the emails. In this function, we first set up and start an SMTP session, then create an email object specifying the sender email, receiver email, subject, and body via the "MIMEText" class. Next, we use the sender email and corresponding password to log into the SMTP server, and finally use the server to send the email. For the delivered package alert, when the camera detects a delivery man, the system sets the receiver email based on the April tag recognition result and a dictionary that maps the April tag ID to the user, sets the subject and body, and then calls "send_email" to notify the corresponding user. For the picked-up package email, the system sets the receiver email based on the detected valid user and sends the email once that user opens the bin(s) with their package(s). For the package expiration email, the trigger condition is that all bins are full and one of the packages has stayed in its bin for too long when the delivery man tries to store a new package.
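
The steps in this paragraph map onto a few calls from Python's standard library. The sketch below shows one way they could fit together and is not the project's "send_email" (whose body is not reproduced in this write-up). The parameter list, the helper `build_email`, and the choice of an SSL session to smtp.gmail.com on port 465 are all assumptions made for illustration.

```python
import smtplib
from email.mime.text import MIMEText


def build_email(subject, body, sender, receiver):
    """Create the email object with its headers and plain-text body."""
    msg = MIMEText(body)
    msg["Subject"] = subject
    msg["From"] = sender
    msg["To"] = receiver
    return msg


def send_email(subject, body, receiver, sender, password,
               host="smtp.gmail.com", port=465):
    """Log into the SMTP server and send one message (host and port are assumed)."""
    msg = build_email(subject, body, sender, receiver)
    with smtplib.SMTP_SSL(host, port) as server:  # session closes on exit
        server.login(sender, password)
        server.sendmail(sender, [receiver], msg.as_string())


# Building a message does not touch the network, so it can be inspected locally.
msg = build_email("Delivered Alert", "Your package has been delivered.",
                  "sender@example.com", "user@example.com")
print(msg["Subject"])
```

Separating message construction from sending keeps the three alert types (delivered, picked up, expired) down to a choice of subject, body, and receiver.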

Sound

In our security system, we used the pygame.mixer.music module to serve a dual purpose through the speaker module. Upon pressing the start button, it plays a specific piece of music, signaling the activation of the camera's detection process. Additionally, it emits a different tune as an alert in case of unauthorized access detection. We chose the pygame.mixer.music module for its ease of use and its support for various audio formats including MP3 and WAV. This setup involves loading the music file when needed, playing it, and then unloading it post-playback to optimize resource usage. The music for the start button is embedded in its callback function, initiating before facial recognition, while a distinct sound is played when detecting an invalid user.

Buttons

Our system features four distinct buttons, each assigned to a specific function: one initiates facial recognition, one powers down the entire system, one quits the program, and one closes a bin once a package is placed. The bottom-most button, for facial recognition, is linked to a callback function; when a falling edge is detected on its pin, indicating a button press, the facial recognition process begins. Similarly, the system shutdown button uses a GPIO pin callback to shut down the system when pressed. For the quit button, we implemented a flag that signals the program to exit. When a delivery man places a package in a bin, they are prompted to press the top-most button to close that bin; this button is read by polling.

Close a bin when button 17 is pressed

Quit the program when button 22 is pressed

Terminate the program when button 23 is pressed

System Reset

In addition, a system reset function is designed to make our system work repeatably. In this function, we reset almost all flag variables used in the program and some global variables used to temporarily store values so that multiple functions can access them together. We also use this to display our welcome page when an action is completed or when a timeout occurs. The logic of resetting the two LEDs is also defined here.

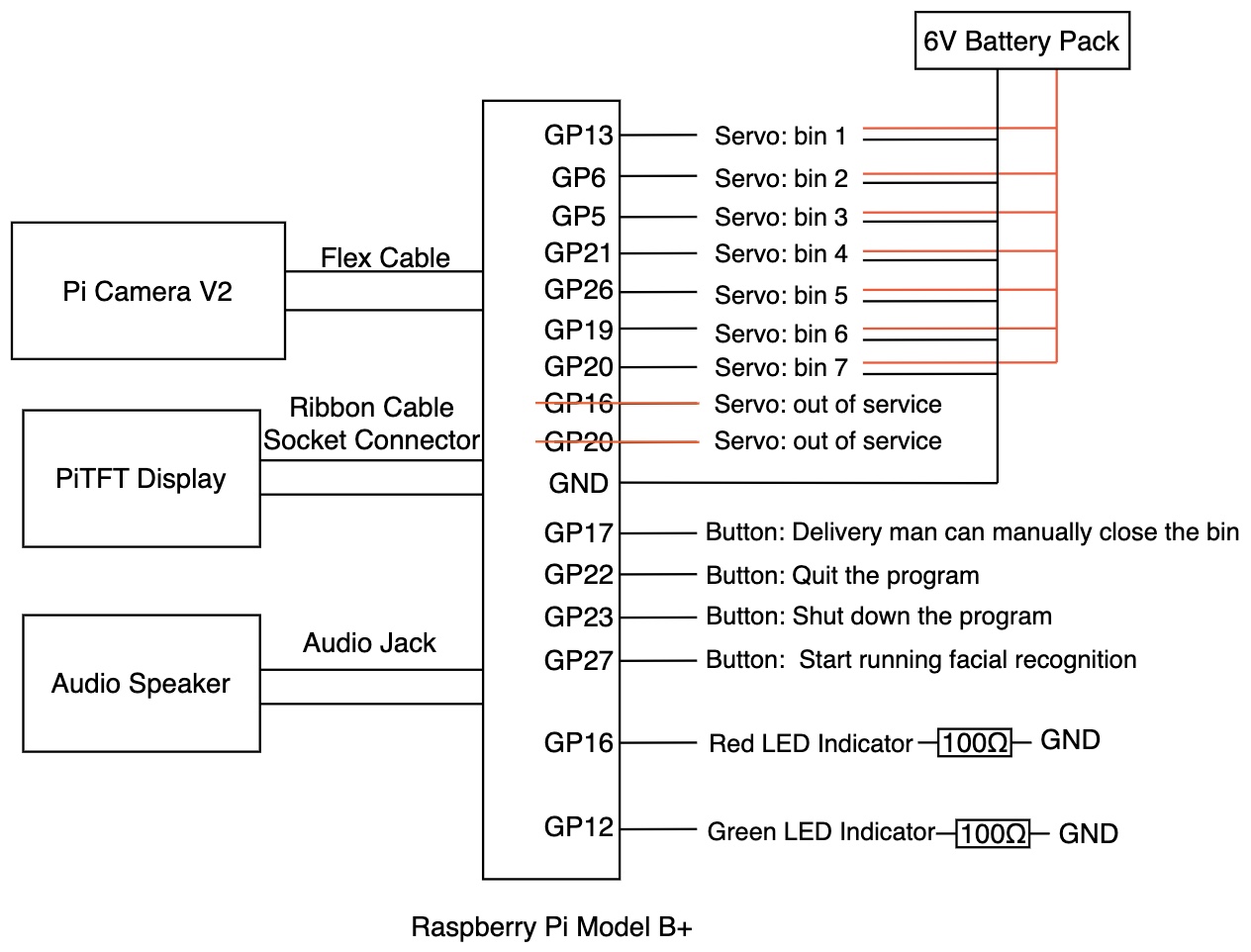

Hardware Design

In relation to the project's objective, a mechanism is required to open and close each bin. For this purpose, we used the SG90 servo to emulate a lock. We modified each servo arm by attaching half of a popsicle stick to it. This lets the servo move from a vertical to a horizontal position, so that a bin door, fashioned from a piece of cardboard, opens and closes with the servo arm's movements. Owing to the number of pins necessary to control all the servos (nine bins in our case), we opted to use the pigpio library. This Python module runs on Linux and communicates with the pigpio daemon to drive GPIO 0-31 with hardware-timed Pulse Width Modulation (PWM). Initially, we experimented with the RPi.GPIO and GPIO Zero libraries. We eventually discarded RPi.GPIO because it only offers software PWM, while the Pi's dedicated hardware PWM is restricted to four specific pins (12, 13, 18, and 19). Although GPIO Zero supports both software and hardware PWM, we initially overlooked the requirement to implement the Pin class under GPIO Zero for generating hardware PWM. This oversight led us to use software PWM, which resulted in significant servo jitter and noticeable latency. Ultimately, we chose the pigpio module for its superior PWM resolution and accuracy, attributable to hardware-timed PWM. It also made it simple to generate PWM on any of GPIO 0-31.

Hardware Schematic

Testing

Our project's testing and verification process is straightforward, as each component yields visible reactions and outcomes when functioning correctly.

Facial Detection Accuracy Test

During testing, we observed occasional inconsistencies in facial detection, particularly in distinguishing between team members Ming and Junze. This could be improved by expanding the image database, thereby enhancing recognition accuracy.

Servos Test

We successfully tested the opening and closing mechanisms of the bins by sending specific Pulse Width Modulation (PWM) pulse widths to the servos, verifying the behavior across the package delivery and pick-up flows, including all edge cases.

Audio & LED Test

The audio and LED indicators were tested to confirm their functionality. We observed that audio cues were correctly initiated at the start of the face recognition process, and the green LED indicator lit up upon recognizing valid users. Conversely, a different audio signal was played for invalid users, accompanied by the illumination of the red LED indicator.

Overall Logic Test

A comprehensive system test was conducted to evaluate the algorithmic flow and functionality. We tested facial recognition for three categories of individuals: valid users, invalid users, and delivery personnel. We also evaluated the package pick-up logic for users with varying package statuses (no package, a single package, or multiple packages), incorporating corresponding features. For delivery personnel, we tested scenarios with available bins, fully occupied bins with packages outside the protected time range (e.g., 50 sec), and fully occupied bins with all packages within the protected time range, along with their corresponding features.

April Tag Accuracy Test

We verified the system's ability to accurately read April tags through the Pi camera and extract the corresponding linked information. This test ensured that the camera was capable of not only recognizing the tags but also correctly associating them with specific user data.

PiTFT Display Test

This functionality was tested by observing various messages displayed on the screen. Our focus was to ensure the flexibility and accuracy of the display features, including specifying the font, color, and text centering. Additionally, we assessed the effectiveness of text wrapping to prevent overflow off-screen and the ability to clear the screen between messages, ensuring no overlap occurred throughout the program's workflow.
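
The wrapping and centering behavior exercised by the display test can be illustrated with the standard textwrap module, which the project code imports. This is a sketch under stated assumptions, not the project's display_message(): the helper name `layout_message`, the 25-character line width, the 30-pixel line height, and the 240-pixel screen height are all illustrative values.

```python
import textwrap


def layout_message(message, max_chars=25, line_height=30, screen_height=240):
    """Wrap message and return (line, center_y) pairs vertically centered on screen."""
    lines = textwrap.wrap(message, width=max_chars)
    total_height = len(lines) * line_height
    top = (screen_height - total_height) // 2
    # Each line is centered within its own row of line_height pixels.
    return [(line, top + i * line_height + line_height // 2)
            for i, line in enumerate(lines)]


for line, y in layout_message("Hello Delivery Man, you may place the package in Bin 1"):
    print(y, line)
```

Each (line, center_y) pair could then be rendered with a Pygame font and blitted with its rectangle centered at that height, after clearing the screen so earlier messages do not overlap.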

Results

All aspects of the project performed as planned in the end. Along the way, we ran into difficulties and abnormal behavior from both hardware and software: getting the bin placement and retrieval logic right, power consumption issues from the servos (fixed by using an external battery pack), and mistakenly using GPIO18 to drive an LED even though that pin is meant for the piTFT backlight. These, as well as other small bugs, were resolved through algorithmic adjustments and overall software/hardware testing, ensuring the goals outlined in the description were eventually met. The one issue that persisted was inconsistent facial recognition. For example, sometimes a valid user was detected as a delivery man, or an invalid user was detected as valid. Providing more training images for each user and the delivery man would likely have made the model more reliable. Overall, we were able to create a fully working model of a storage system that efficiently manages package delivery and retrieval using a combination of facial recognition, AprilTag scanning, the implementation of available/unavailable bins, Pygame messages on the PiTFT screen, LED and audio indicators, and automated emails.

Conclusion & Future Work

Our project has not only demonstrated a successful model of a technology-integrated storage system but has also redefined the interaction between users and their stored items. By incorporating facial and AprilTag recognition, along with timely email notifications, we've crafted a system that adds a layer of smart, intuitive interaction with packages.

We not only achieved the set objectives but also opened new avenues for future enhancements. Right now, we only send email alerts to the users, but we could also send alerts to the delivery man, for example to indicate that all bins in a system are full and every package is still within its pickup time. We could create a mobile app for the delivery man to see drop-off areas (similar to Uber) and for users to track the status of their packages. Instead of servos, we could also incorporate locks.

Work Distribution

Project group picture

Joash Shankar

jcs556@cornell.edu

Worked on face detection, logic for picking up and placing packages in bins, and overall software testing

Ming He

hh759@cornell.edu

Worked on april tag detection, servo hardware setup, logic for picking up and placing packages in bins, and overall software testing

Junze Zhou

jz2275@cornell.edu

Worked on email messaging system, LEDs, and system reset functionalities.

Parts List

- Raspberry Pi - Provided in lab

- Raspberry Pi Camera V2 - Provided in lab

- 1x red LED, 1x green LED, 2x 100 Ω Resistors, 1x Battery pack, 4x AA batteries, 1x Breadboard, and Wires - Provided in lab

- Cardboard, tape, popsicle sticks - Provided in lab

- 9x servos - Provided in lab

- Speakers - Provided in lab

References

PiCamera Documentation

SG90 Servo Jitter

SG90 Servo Documentation

OpenCV Library

Pigpio Library

RPi GPIO & gpiozero Documentation

Multiprocessing Documentation

MQTT Server Documentation

bashrc Setup from Lab3

Code Appendix

SmartStorageSystem.py

'''

ECE 5725 Final Project: Smart Storage System

Team: Joash Shankar (jcs556), Ming He (hh759), Junze Zhou (jz2275)

Date: Dec 8th, 2023

SmartStorageSystem.py

Description: This system handles the logic like in a real apartment system. We handle facial recognition for valid/invalid users, april tag scanning for packages linked to users, email notifications sending to users, LEDs/sound to act as an indicator if the user is valid or not, and servos to open the bins.

'''

import RPi.GPIO as GPIO

import pygame

from pygame.locals import *

import textwrap

import time

import smtplib

from email.mime.text import MIMEText

import face_recognition

import imutils

from imutils.video import VideoStream

from imutils.video import FPS

import pickle

import cv2

import apriltag

from picamera.array import PiRGBArray

from picamera import PiCamera

import pigpio

import os

# GPIOs for servos: 5 6 13 19 20 21 26

# GPIOs for LEDs: 12 16

# Buttons used: 17 22 23 27

os.putenv('SDL_VIDEODRIVER', 'fbcon')

os.putenv('SDL_FBDEV', '/dev/fb0') # display on piTFT

pygame.init()

pygame.mouse.set_visible(False) # Turn on/off mouse cursor

pathUser = "/home/pi/detection.mp3" # stores audio file corresponding to doorbell press (plays as face detection loads)

pathInvalid = "/home/pi/invalid_user.mp3" # stores audio file corresponding to invalid user being scanned

# Mapping of april tag IDs to user information

tag_info = {

1: "User: Joash",

2: "User: Ming",

3: "User: Bob", # used as a placeholder for demonstrating all bins are full so we don't overload servos assigned to Joash or Ming for the demo

4: "",

5: "",

6: "",

7: ""

# 8: "",

# 9: ""

# Add more tags as needed

}

# Mapping of bin IDs to april tag IDs

# 3 is assigned to Bob for demo

bin_AT = {

1: 0,

2: 3,

3: 2,

4: 3,

5: 0,

6: 3,

7: 3

# 8: 3,

# 9: 3

}

# Mapping of bin numbers to GPIO pins

bin_to_gpio = {

1: 13,

2: 6,

3: 5,

4: 21,

5: 26,

6: 19,

7: 20

# 8: 20,

# 9: 20

}

# Keep track of bin status (open/close)

# bins 1 and 5 starts open and everything else closed for demo

bin_status = {

1: "open",

2: "close",

3: "close",

4: "close",

5: "open",

6: "close",

7: "close"

# 8: "close",

# 9: "close"

}

sys_start_time = time.time()

# Mapping of bin numbers to the time a package was placed in it

# (we hard coded this list for demo, to demonstrate when all bins are full)

# time.time is used since we only use bin 1, 3, 5 for the demo. time.time is updating the package time so that

# whenever the system tries to find the older packages that are also out of valid time range, bins 2, 4, 6, 7 will be ignored.

# sys_start_time is used to properly indicate time, so that it can update the proper time when a package was dropped.

bin_package_time = {

1: sys_start_time,

2: time.time(),

3: sys_start_time,

4: time.time(),

5: sys_start_time,

6: time.time(),

7: time.time()

# 8: time.time(),

# 9: time.time()

}

# Video stream object

vs = None

# Used for pygame init screen

screen = None

# Flags

pkg_Delivered = False # track if package has been delivered

pkg_Picked = False # track if package had been picked up

email_sent = False # check if an email notif has been sent

AT_detect_start = False # indicate start of april tag detection

out_time = False # indicate if a timeout occurred during facial recognition

delivery_man_detected = False # indicate if a delivery person has been detected

invalid_user = False # indicate unknown user

exit_flag = False # indicate if the program needs to terminate

no_bin_avail = False # indicate message that no bin is available

# Servo global variables

servos = {} # store servo objects for each bin

available = 500 # servo pulse width to open a bin

unavailable = 1500 # servo pulse width to close a bin

# Other global variables

detect_cam_time_limit = 60 # time limit for facial recognition before timeout

bin_global = 0 # keep track of current bin being accessed

tag_id = 0 # store ID of a detected april tag

AT_info = "" # store info related to a detected april tag

# Find open bin to place user's package in
def find_open_bin():
    global bin_status
    global bin_AT
    global bin_global
    global tag_id
    global bin_package_time
    global screen
    global tag_info
    global no_bin_avail

    # check if all bins are full
    all_bins_full = all(status == "close" for status in bin_status.values())

    if all_bins_full:
        curr_time = time.time() # get current time
        time_elapsed = {}

        # Loop through each bin to calculate the time elapsed since the last package was placed
        for bin_number, package_time in bin_package_time.items():
            if package_time is not None:
                time_elapsed[bin_number] = curr_time - package_time
            else:
                time_elapsed[bin_number] = 0

        # determine the bin to open based on the bin with most time elapsed
        bin_to_open = max(time_elapsed, key=time_elapsed.get)

        # have bin open if the time elapsed is greater than 50 seconds
        if(time_elapsed[bin_to_open] > 50):
            display_message(screen, f"Take the previous package back to USPS. Bin {bin_to_open} will open for the new package. Press top-most button to close the bin!")
            expired_tag_id = bin_AT[bin_to_open] # get tag ID of expired package
            expired_name = tag_info[expired_tag_id] # get name associated with tag ID

            # Determine user's expired package email
            if (expired_name == "User: Joash"):
                receiver_email = "jcs556@cornell.edu"
            elif (expired_name == "User: Ming"):
                receiver_email = "hh759@cornell.edu"
            elif (expired_name == "User: Bob"):
                receiver_email = "mingyedie@gmail.com"

            subject = "Package Expiration Alert"
            body = f"Dear {expired_name[6:]},\n\nYour time to pick up your package is up. Please pick it up at your closest USPS!\n\nSmart Storage System"
            open_bin(bin_to_open)
            time.sleep(0.2)
            send_email(subject, body, receiver_email)
        else:
            display_message(screen, "No bin is available.")
            no_bin_avail = True
            return
    else:
        # if not all bins are full, find the first available bin
        for bin_number, status in bin_status.items():
            if status == "open":
                bin_to_open = bin_number
                display_message(screen, "Hello Delivery Man, you may place the package in Bin " + str(bin_number) + " , then press the top-most button to close the bin.")
                break

    # Update the bin status, package time, and tag ID for the opened bin
    bin_status[bin_to_open] = "close"
    bin_package_time[bin_to_open] = time.time() # update the time when new package is placed
    bin_AT[bin_to_open] = tag_id
    bin_global = bin_to_open

# Handle bin with user's package when picked up
def get_package(user_name):
    global tag_info
    global bin_AT
    global bin_status
    global screen

    bin_pkgs = [] # store bin number with user's package
    tag_id_for_pkg = [k for k, v in tag_info.items() if v[6:] == user_name][0] # get april tag id associated with user

    # Find bin containing user's package
    for k, v in bin_AT.items():
        if v == tag_id_for_pkg:
            bin_pkgs.append(k) # store bin number when match is found

    # Logic to handle picking up package
    if bin_pkgs:
        if (len(bin_pkgs) > 1): # if user has packages in more than one bin
            many_bins_str = ', '.join(map(str, bin_pkgs)) # concat bin numbers into a string
            display_message(screen, "Hey " + user_name + ", you may collect your packages in bins "+many_bins_str+"!")
        else: # if user has package in one bin
            display_message(screen, "Hey " + user_name + ", you may collect your package at bin "+str(bin_pkgs[0])+"!")

        for bin_number in bin_pkgs: # open each bin containing the user's packages and update their status
            open_bin(bin_number)
            bin_status[bin_number] = "open" # reset bin status after package picked up
            bin_AT[bin_number] = 0 # reset bin_AT to indicate no package associated with bin
    else:
        display_message(screen, "Hey " + user_name + ", you have no packages.")

# Initialize servos
def initialize_servos():
    global servos
    global bin_to_gpio

    for bin_number, pin in bin_to_gpio.items(): # iterate through each bin and its corresponding GPIO pin
        servos[bin_number] = pigpio.pi() # init pigpio object for each bin
        servos[bin_number].set_mode(pin, pigpio.OUTPUT) # set GPIO pin as an output
        close_bin(bin_number)
        time.sleep(0.1) # short delay to ensure servo is stable

    # used for demo
    # open_bin(1)
    # time.sleep(0.1)
    # open_bin(5)
    # time.sleep(0.1)

# Stop servos
def stop_servos():
    global servos
    global bin_to_gpio

    for bin_number, pin in bin_to_gpio.items(): # iterate through each bin
        servos[bin_number].stop() # stop servo associated with bin
        time.sleep(0.1) # short delay to ensure servo is stable

# Open bin (500 us pulse: servo arm at 0 degrees, vertical)
def open_bin(bin_number):
    global servos
    global bin_to_gpio

    pin = bin_to_gpio[bin_number] # get GPIO associated with bin number
    servos[bin_number].set_servo_pulsewidth(pin, available) # set servo pulse width to open bin (500)

# Close bin (1500 us pulse: servo arm at 90 degrees, horizontal)
def close_bin(bin_number):
    global servos
    global bin_to_gpio

    pin = bin_to_gpio[bin_number] # get GPIO associated with bin number
    servos[bin_number].set_servo_pulsewidth(pin, unavailable) # set servo pulse width to close bin (1500)

# User/Delivery Man doorbell button
def GPIO27_callback(channel):
    global screen

    display_message(screen, "User detected. Processing face recognition...")
    pygame.mixer.music.load(pathUser) # load audio file
    pygame.mixer.music.play(loops=1) # play audio file (loops=1 repeats it once after the first play)

    if not pygame.mixer.music.get_busy(): # unload only if playback has already finished (this check does not block)
        pygame.mixer.music.unload()

    process_face_detection(user=True) # start face recognition

# Delivery Man button to indicate they placed package in bin
def close_bin_after_pkg(): # GPIO17
    global bin_global
    global delivery_man_detected

    close_bin(bin_global)
    delivery_man_detected = False # reset flag
    #cv2.destroyAllWindows()

# April tag detection

def AprilTag_scan():

global vs

global AT_info

global AT_detect_start

global screen

global out_time

global bin_global

global delivery_man_detected

global detect_cam_time_limit

global tag_id

temp_count = 0

temp_bool = True

start_time_AT = time.time()

display_message(screen, "Scanning for AprilTag...")

while True:

if temp_bool:

frame = vs.read()

# Initialize AprilTag detector with a tag family

options = apriltag.DetectorOptions(families='tag16h5')

detector = apriltag.Detector(options)

# Convert to grayscale for detection

gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

# Detect AprilTags in the image

results = detector.detect(gray)

for r in results:

# Draw the bounding boxes around the detected april tag

(ptA, ptB, ptC, ptD) = r.corners

for i in range(4):

pt1 = (int(r.corners[i][0]), int(r.corners[i][1]))

pt2 = (int(r.corners[(i + 1) % 4][0]), int(r.corners[(i + 1) % 4][1]))

cv2.line(frame, pt1, pt2, (0, 255, 0), 2)

# Retrieve information based on the tag ID

tag_id = r.tag_id

AT_info = tag_info.get(tag_id, "Unknown Tag")

# Show the output frame - uncomment if you want to see the bounding box of the AprilTag detection live on monitor

# cv2.imshow("AprilTag Scan", frame)

# key = cv2.waitKey(1) & 0xFF

# camera recognition timeout check

if (time.time() - start_time_AT > detect_cam_time_limit):

display_message(screen, "Timing out... No AprilTag detected!")

out_time = True

break

# Check if user's associated april tag is detected and open bin for user

if ("User:" in AT_info):

find_open_bin()

temp_bool = False

# cv2.destroyAllWindows()

if no_bin_avail == True:

break

else:

if (not GPIO.input(17)):

if bin_global != 0:

time.sleep(0.2)

display_message(screen, f"Bin {bin_global} is closing!")

close_bin_after_pkg()

break

time.sleep(0.1)

if delivery_man_detected and bin_global != 0 and time.time() - start_time_AT > 30: # close a bin if delivery man is detected and specific bin was open after a period of time

display_message(screen, f"Bin {bin_global} is closing!")

close_bin(bin_global)

bin_package_time[bin_global] = time.time()

delivery_man_detected = False # reset flag

break

# Face detection

def process_face_detection(user):

global vs

global pkg_Delivered

global pkg_Picked

global AT_detect_start

global email_sent

global AT_info

global screen

global led

global delivery_man_detected

global invalid_user

global out_time

global detect_cam_time_limit

# At the start of face recognition

display_message(screen, "Scanning in progress, please stand by...")

start_time = time.time()

# Load face encodings and initialize the video stream

data = pickle.loads(open("/home/pi/SmartStorageSystem/encodings.pickle", "rb").read())

# Start the FPS counter

fps = FPS().start()

# Capture and process frames in a loop

while True:

frame = vs.read()

frame = imutils.resize(frame, width=500)

boxes = face_recognition.face_locations(frame)

encodings = face_recognition.face_encodings(frame, boxes)

names = []

name = ""

# Loop over the facial embeddings

for encoding in encodings:

matches = face_recognition.compare_faces(data["encodings"], encoding)

name = "Unknown" # if face is not recognized

# Check to see if we have found a match

if True in matches:

# Find the indexes of all matched faces then init a dict to count each face

matchedIdxs = [i for (i, b) in enumerate(matches) if b]

counts = {}

# Count each recognized face

for i in matchedIdxs:

name = data["names"][i]

counts[name] = counts.get(name, 0) + 1

# Determine the recognized face with the largest number of votes

name = max(counts, key=counts.get)

receiver_email = ""

subject = ""

body = ""

# Action based on the person's identity

if user and (name == "Joash" or name == "Ming"):

# User recognized, open the user's bin

email_sent = True

GPIO.output(12, GPIO.HIGH)

get_package(name)

time.sleep(3)

elif user and name == "Delivery Man":

AT_detect_start = True

GPIO.output(12, GPIO.HIGH)

delivery_man_detected = True

else:

# Access denied / unrecognized person

GPIO.output(16, GPIO.HIGH)

pygame.mixer.music.load(pathInvalid) # load audio file

pygame.mixer.music.play(loops=1) # play audio file

while pygame.mixer.music.get_busy(): # wait until audio is done playing

time.sleep(0.1) # loop body: poll every 100 ms

pygame.mixer.music.unload() # after the loop: release the audio file

display_message(screen, "Access denied. Unrecognized user.")

time.sleep(2)

# update the list of names

names.append(name)

# loop over the recognized faces

for ((top, right, bottom, left), name) in zip(boxes, names):

# draw the predicted face name on the image - color is in BGR

cv2.rectangle(frame, (left, top), (right, bottom), (0, 255, 225), 2)

y = top - 15 if top - 15 > 15 else top + 15

cv2.putText(frame, name, (left, y), cv2.FONT_HERSHEY_SIMPLEX, .8, (0, 255, 255), 2)

# display the image to our monitor - uncomment if you want to see bounding box of facial detection live on monitor

# cv2.imshow("Facial Recognition is Running", frame)

# key = cv2.waitKey(1) & 0xFF

# camera recognition timeout check

if (time.time() - start_time > detect_cam_time_limit):

display_message(screen, "Timing out... No user detected!")

out_time = True

break

if (name == "Unknown"):

invalid_user = True

break

if (email_sent == True):

# sending an email

if name == "Joash":

receiver_email = "jcs556@cornell.edu"

elif name == "Ming":

receiver_email = "hh759@cornell.edu"

elif name == "Bob":

receiver_email = "mingyedie@gmail.com"

subject = "Package Picked Up Alert"

body = f"Dear {name},\n\nYou've picked up your package!\n\nSmart Storage System"

display_message(screen, f"Email notification sent to {name}.")

send_email(subject, body, receiver_email)

pkg_Picked = True

break

if AT_detect_start == True:

AprilTag_scan()

# send email to respective user after apriltag is scanned

if (AT_info == "User: Joash"):

receiver_email = "jcs556@cornell.edu"

elif (AT_info == "User: Ming"):

receiver_email = "hh759@cornell.edu"

elif (AT_info == "User: Bob"):

receiver_email = "mingyedie@gmail.com"

subject = "Package Delivery Alert"

body = f"Dear {AT_info[6:]},\n\nYour package has been delivered!\n\nSmart Storage System"

send_email(subject, body, receiver_email)

pkg_Delivered = True

break

# quit when 'q' key is pressed

# if key == ord("q"):

# break

# update the FPS counter

fps.update()

# stop the timer and display FPS information

fps.stop()

# cv2.destroyAllWindows()

# Send email to users

def send_email(subject, body, receiver_email):

# Set up the SMTP server and port

smtp_server = 'smtp.gmail.com'

port = 587

# Sender and receiver email addresses

sender_email = 'jzz712846@gmail.com'

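# Email credentials (read from the environment so the app password is not stored in the source; SMTP_APP_PASSWORD is a placeholder variable name)

password = os.environ['SMTP_APP_PASSWORD']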

# Create a MIMEText object to represent the email

message = MIMEText(body)

message['From'] = sender_email

message['To'] = receiver_email

message['Subject'] = subject

# Start the SMTP session

server = smtplib.SMTP(smtp_server, port)

server.starttls() # Start TLS encryption

server.login(sender_email, password) # Log in to the SMTP server

# Send the email

server.sendmail(sender_email, receiver_email, message.as_string())

# Close the SMTP session

server.quit()

# Initializes and returns the main display surface

def init_pygame_display():

pygame.display.init()

size = (320, 240)

screen_init = pygame.display.set_mode(size) # create window of specified size

return screen_init

# Displays message on Pygame surface (wraps and centers text)

def display_message(screen, message, font_size=28, color=(255, 255, 255)):

screen.fill((0, 0, 0)) # clear screen

pygame.display.update()

font = pygame.font.Font(None, font_size)

# Wrap the text

wrapped_text = textwrap.wrap(message, width=30)

# Starting Y position

start_y = (240 - (font_size * len(wrapped_text))) // 2 # center the block of text vertically

# Render and display each line of text

for i, line in enumerate(wrapped_text):

text_surface = font.render(line, True, color)

rect = text_surface.get_rect(center=(160, start_y + i * font_size))

screen.blit(text_surface, rect)

pygame.display.update() # show text

# Used as a failsafe quit

def GPIO22_callback(channel):

global exit_flag

exit_flag = True

# Used as a shutdown button

def GPIO23_callback(channel):

global screen

display_message(screen, "Shutting down the system. Have a great day!")

time.sleep(2)

os.system('sudo shutdown -h now')

# Resets the system to its initial state by modifying global variables and resetting LED

def system_reset(init = False):

global pkg_Delivered

global pkg_Picked

global AT_info

global email_sent

global AT_detect_start

global out_time

global led

global screen

global vs

global invalid_user

global bin_global

if (not init):

pkg_Delivered = False

pkg_Picked = False

AT_info = ""

email_sent = False

AT_detect_start = False

out_time = False

invalid_user = False

bin_global = 0

display_message(screen, "Welcome to the Smart Storage System. Click on the doorbell (bottom-most button) if you're a valid user.")

# reset LEDs

GPIO.output(16, GPIO.LOW)

GPIO.output(12, GPIO.LOW)

# Main loop

def main():

# Display initial message

global screen

global vs

global pkg_Delivered

global led

global exit_flag

global pkg_Picked

global out_time

global invalid_user

# init pygame font and show welcome message

pygame.font.init()

screen = init_pygame_display()

display_message(screen, "Welcome to the Smart Storage System. Click on the doorbell (bottom-most button) if you're a valid user.")

exit_flag = False

initialize_servos()

# GPIO setup

GPIO.setmode(GPIO.BCM)

GPIO.setup(17, GPIO.IN, pull_up_down=GPIO.PUD_UP) # push button

GPIO.setup(27, GPIO.IN, pull_up_down=GPIO.PUD_UP) # push button

GPIO.add_event_detect(27, GPIO.FALLING, callback=GPIO27_callback, bouncetime=300)

GPIO.setup(22, GPIO.IN, pull_up_down=GPIO.PUD_UP) # push button

GPIO.add_event_detect(22, GPIO.FALLING, callback=GPIO22_callback, bouncetime=300)

GPIO.setup(23, GPIO.IN, pull_up_down=GPIO.PUD_UP) # push button

GPIO.add_event_detect(23, GPIO.FALLING, callback=GPIO23_callback, bouncetime=300)

# Pygame setup for sound

pygame.mixer.init()

# Initialize the video stream here and let it warm up

vs = VideoStream(usePiCamera=True, resolution = (640, 480), framerate = 32).start()

time.sleep(2.0)

# LEDs setup

GPIO.setup(16, GPIO.OUT)

GPIO.setup(12, GPIO.OUT)

GPIO.output(16, GPIO.LOW)

GPIO.output(12, GPIO.LOW)

system_reset(True)

# Main loop to keep the program running

while not exit_flag:

if (pkg_Delivered or pkg_Picked or out_time or invalid_user):

system_reset()

time.sleep(0.5)

GPIO.cleanup()

pygame.mixer.quit()

stop_servos()

vs.stop()

display_message(screen, "Quitting the system...")

time.sleep(1)

if __name__ == "__main__":

main()
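The matching step inside process_face_detection picks, for each detected face, the name that owns the most matching known encodings. The following is a minimal standalone sketch of that vote, using collections.Counter in place of the hand-built dict. The helper name vote_for_name and the sample data are hypothetical and not part of the project code.

```python
from collections import Counter


def vote_for_name(matches, known_names, default="Unknown"):
    """Return the name with the most True entries in `matches`.

    `matches` is a list of booleans, one per known encoding (the shape
    returned by face_recognition.compare_faces), and `known_names` is the
    parallel list of names. Falls back to `default` when nothing matched.
    """
    votes = Counter(name for name, hit in zip(known_names, matches) if hit)
    if not votes:
        return default
    # most_common(1) returns [(name, count)] for the top-voted name
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    known = ["Joash", "Joash", "Ming", "Ming", "Ming"]
    print(vote_for_name([True, True, False, True, False], known))
    print(vote_for_name([False] * 5, known))
```

Keeping the vote in a small pure function like this would make it possible to check the selection logic without a camera or the encodings file.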

headshots_picam.py

'''
ECE 5725 Final Project: Smart Storage System
Team: Joash Shankar (jcs556), Ming He (hh759), Junze Zhou (jz2275)
Date: Dec 8th, 2023
headshots_picam.py
Description: Capture images from the RPi camera and save them to a dataset directory of known users.
'''

import cv2
from picamera import PiCamera
from picamera.array import PiRGBArray

name = 'Name' # replace with name of user you want to add

cam = PiCamera()
cam.resolution = (512, 304)
cam.framerate = 10
rawCapture = PiRGBArray(cam, size=(512, 304))

img_counter = 0

while True:
    for frame in cam.capture_continuous(rawCapture, format="bgr", use_video_port=True):
        image = frame.array
        cv2.imshow("Press Space to take a photo", image)
        rawCapture.truncate(0) # clear the stream before the next frame is captured

        k = cv2.waitKey(1)
        if k%256 == 27: # ESC pressed
            break
        elif k%256 == 32: # SPACE pressed
            img_name = "dataset/"+ name +"/image_{}.jpg".format(img_counter) # add taken img to dataset folder matching the already-created name of the user
            cv2.imwrite(img_name, image)
            print("{} written!".format(img_name))
            img_counter += 1 # incrementing so you can take multiple images of user

    if k%256 == 27: # ESC pressed: also leave the outer loop
        print("Escape hit, closing...")
        break

cv2.destroyAllWindows()
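The capture script above writes into dataset/<name>/ and relies on that folder already existing. cv2.imwrite typically returns False rather than raising when the target directory is missing, so a missing folder can go unnoticed. Below is a small sketch of preparing the folder and building the file name up front, using only the standard library. The helper name image_path_for is hypothetical.

```python
import os
import tempfile


def image_path_for(name, counter, dataset_dir="dataset"):
    """Create dataset_dir/name if needed and return the path for image `counter`."""
    user_dir = os.path.join(dataset_dir, name)
    os.makedirs(user_dir, exist_ok=True)  # no error if the folder already exists
    return os.path.join(user_dir, "image_{}.jpg".format(counter))


if __name__ == "__main__":
    # Demonstrate against a throwaway directory instead of the real dataset.
    with tempfile.TemporaryDirectory() as tmp:
        print(image_path_for("Name", 0, dataset_dir=tmp))
```

In the capture loop, the returned path could be passed to cv2.imwrite, and the boolean it returns could be checked before printing the "written!" message.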

train_model.py

#! /usr/bin/python

'''
ECE 5725 Final Project: Smart Storage System
Team: Joash Shankar (jcs556), Ming He (hh759), Junze Zhou (jz2275)
Date: Dec 8th, 2023
train_model.py
Description: Load the images in the dataset folder so that valid users can be detected in SmartStorageSystem.py. For each image, detect the face and compute its facial encoding, labeled with the name of the folder the image is in.
'''

from imutils import paths
import face_recognition
import pickle
import cv2
import os

# our images are located in the dataset folder
print("[INFO] start processing faces...")
imagePaths = list(paths.list_images("dataset"))

# initialize the list of known encodings and known names
knownEncodings = []
knownNames = []

# loop over the image paths
for (i, imagePath) in enumerate(imagePaths):
    print("[INFO] processing image {}/{}".format(i + 1, len(imagePaths)))

    # extract the person name from the image path (the name of the parent folder)
    name = imagePath.split(os.path.sep)[-2]

    # load the input image and convert it from BGR (OpenCV ordering) to RGB (dlib ordering)
    image = cv2.imread(imagePath)
    rgb = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

    # detect the (x, y)-coordinates of the bounding boxes corresponding to each face in the input image
    boxes = face_recognition.face_locations(rgb, model="hog")

    # compute the facial embedding for each face
    encodings = face_recognition.face_encodings(rgb, boxes)

    # loop over the encodings
    for encoding in encodings:
        # add each encoding + name to our lists of known encodings and names
        knownEncodings.append(encoding)
        knownNames.append(name)

# dump the facial encodings + names to disk
print("[INFO] serializing encodings...")
data = {"encodings": knownEncodings, "names": knownNames}
with open("encodings.pickle", "wb") as f: # file is closed automatically
    f.write(pickle.dumps(data))
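train_model.py stores a dict with two parallel lists, "encodings" and "names", which SmartStorageSystem.py loads again before matching faces. Here is a minimal round-trip sketch of that file layout. The helper names save_encodings and load_encodings are assumptions for illustration, and the short lists stand in for the real encodings produced by face_recognition.

```python
import os
import pickle
import tempfile


def save_encodings(path, encodings, names):
    """Write the two parallel lists in the same dict layout train_model.py uses."""
    if len(encodings) != len(names):
        raise ValueError("encodings and names must have the same length")
    with open(path, "wb") as f:  # the context manager closes the file
        pickle.dump({"encodings": encodings, "names": names}, f)


def load_encodings(path):
    """Read the dict back; only load pickle files you created yourself."""
    with open(path, "rb") as f:
        return pickle.load(f)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "encodings.pickle")
        # Placeholder vectors, not real face encodings.
        save_encodings(path, [[0.1, 0.2], [0.3, 0.4]], ["Joash", "Ming"])
        data = load_encodings(path)
        print(data["names"])
```

The length check is an addition of this sketch: because names are looked up by the index of a matching encoding, the two lists have to stay aligned.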